15 Sep A Beginner’s Guide to Successful Web Scraping

Among many other great things, the Internet gave humanity the free movement of public information. With so much data available at the click of a button, third parties that build businesses and develop products have a far greater chance of impacting the modern world. While a much higher level of competition might not benefit every individual, easily accessible resources stimulate rapid growth.

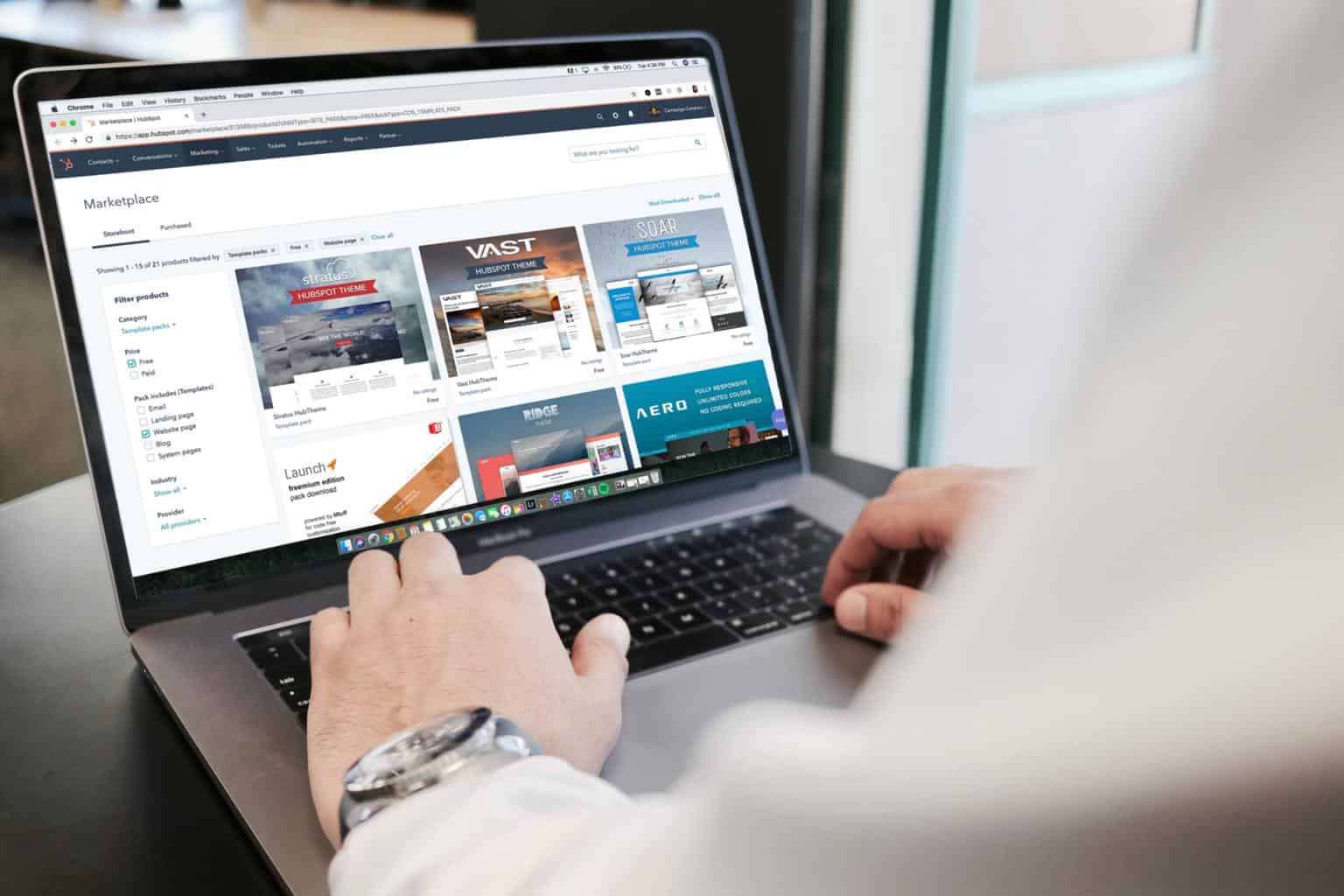

However, modern companies and ambitious individuals face a unique problem. There is so much information that the human mind cannot collect, store, and process it all. Third parties that use digital tools and software stay ahead of the curve by outperforming competitors and spearheading valuable products. This is where web scraping plays a big part.

While the process itself might not be related to the activity that brings a business its income, tech-savvy companies train or hire personnel to reap the benefits of data aggregation. Careful calibration of scraping bots and the use of protective tools like proxy servers separate beginner scrapers from successful data analytics teams.

For example, datacenter proxies are a popular choice for web scraping: they are hosted in powerful data centers and are not associated with any ISP, which makes them the fastest type of proxy. But does that mean a datacenter proxy is the best server for your scraping needs? Let’s take a deeper look at web scraping to find out! This article presents the basic knowledge you need to succeed in web scraping.

A Potential Career in Web Scraping

Colleges and universities around the world are creating new programs that prioritize machine learning and data analytics. Because so many of our modern devices depend on communication and the transfer of information, web scraping is an inseparable part of the data science experience.

Today, Python is one of the most popular programming languages in the world. Many courses that teach Python cover the basics of automated data extraction. Once you get familiar with the language, you will encounter free web scraping and parsing frameworks like Scrapy and BeautifulSoup.

Once you have enough coding knowledge, there is no better learning experience than applying your skills to personal scraping tasks. Using these tools will help you aggregate data and learn to solve the problems you meet along the way.
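As a first personal exercise, the parsing step can be tried without installing anything. The sketch below is only an illustration: it uses Python's standard-library `html.parser` as a stand-in for what BeautifulSoup or Scrapy do more conveniently, and the `SAMPLE_HTML` snippet, the `LinkCollector` class, and the `extract_links` helper are made-up names for this example, not part of any framework.

```python
from html.parser import HTMLParser

# Hypothetical page fragment used only for this example.
SAMPLE_HTML = """
<ul>
  <li><a href="/wiki/Python">Python</a></li>
  <li><a href="/wiki/Web_scraping">Web scraping</a></li>
  <li><a>no target</a></li>
</ul>
"""


class LinkCollector(HTMLParser):
    """Collects (href, link text) pairs from <a> tags that have an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # href of the <a> tag currently open, if any
        self._text = []     # text fragments seen inside that tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def extract_links(html):
    """Parse an HTML string and return the links found in it."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == "__main__":
    for href, text in extract_links(SAMPLE_HTML):
        print(href, "->", text)
```

Once this makes sense, the same task is usually shorter with a dedicated parsing library, which is a good reason to move on to the frameworks mentioned above.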

Respect Others

A respectful approach to web scraping will help you collaborate with other third parties and avoid threats to your network identity. Beginners often practice on popular websites like Wikipedia to avoid the wrath of pages that use protection against scraping and DDoS attacks.

While working on some projects, you will encounter websites that offer a built-in API to give easier access to public data and avoid the server toll caused by scraping. Depending on the business model, some companies encourage third parties to collect their public data because its distribution can benefit them in the long run. In such situations, take the respectful approach: use the API instead of extracting the information with a web scraper.
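When a site offers no API and scraping is the only option, one common way to keep the server toll low is to space requests out. The sketch below is an assumption about how that could look, not a method described by any particular site: the `Throttle` class and its `min_interval` parameter are hypothetical names, and the right delay depends on the website you are visiting.

```python
import time


class Throttle:
    """Enforces a minimum pause between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock               # injectable for testing
        self._sleep = sleep               # injectable for testing
        self._last = None                 # time of the previous request

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


if __name__ == "__main__":
    throttle = Throttle(min_interval=1.0)
    for page in ("page-1", "page-2", "page-3"):  # placeholder page names
        throttle.wait()
        print("would fetch", page)
```

Calling `wait()` before every request keeps a scraper from hitting a page faster than the chosen interval, no matter how quickly the rest of the loop runs.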

Start Using Proxy Servers

Web scraping operations send far more connection requests than an actual human user. Because the transfer of data packets already exposes your IP, data extraction makes you far more noticeable to competitors and other third parties. To avoid extensive load on the server and prevent public data aggregation, companies set up honeypots that feed fake data to a scraper, or simply blacklist the IP address.

If a data analytics team ran web scrapers on its main IP, every competitor could identify and expose its network identity. Because so many modern businesses extract public data, most of them rely on a reliable proxy provider. Different types of proxies serve different purposes.

Datacenter proxies are the fastest option, but what they give in speed, they lack in protection. Because their IPs come from big data centers, third parties have an easy time recognizing them. To protect web scrapers and make them look like actual users, businesses choose residential proxies, which let them pick from a big pool of IPs that come from real devices.

Running your web scrapers through a proxy has many benefits. Without proxies, you cannot maximize the potential of your data extraction system, and even a single misstep can get your primary IP address banned. Proxy servers act like a safety net that helps you set up your web scrapers safely and efficiently. If the IP behind one scraper gets recognized and blacklisted, another IP takes its place so collection continues without interruption.
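The failover idea in the previous paragraph can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any specific proxy provider works: `fetch_with_rotation`, `fetch_via_proxy`, and `AllProxiesFailed` are hypothetical names, the proxy addresses are placeholders, and a blocked proxy is assumed to show up as a connection or HTTP error.

```python
import urllib.request


class AllProxiesFailed(Exception):
    """Raised when every proxy in the pool failed for a request."""


def fetch_via_proxy(url, proxy, timeout=10.0):
    """Fetch a URL through one proxy using only the standard library."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.read()


def fetch_with_rotation(url, proxies, fetch=fetch_via_proxy):
    """Try each proxy in turn and return (proxy_used, body) on first success."""
    errors = []
    for proxy in proxies:
        try:
            return proxy, fetch(url, proxy)
        except OSError as exc:  # URLError, HTTPError and timeouts are OSErrors
            errors.append((proxy, exc))
    raise AllProxiesFailed(f"{len(errors)} proxies failed for {url}")


if __name__ == "__main__":
    # Placeholder addresses; a real pool would come from your proxy provider.
    pool = ["http://203.0.113.10:8080", "http://203.0.113.11:8080"]
    try:
        used, body = fetch_with_rotation("http://example.com/", pool)
        print("fetched", len(body), "bytes via", used)
    except AllProxiesFailed as exc:
        print(exc)
```

The `fetch` parameter is there so the rotation logic can be exercised without a network, for example by passing a function that fails for one address and succeeds for the next.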

Data extraction is a necessary skill in a modern business environment. The demand for it contributes to the birth of new companies that specialize in information aggregation and data analysis for their clients. Even if you are not planning to run your own business, mastering web scraping may be a career choice for you! If you want to learn more about web scraping, study proxy servers alongside it.
